ProPublica is an independent, nonprofit newsroom that produces investigative journalism. Its team includes more than 100 investigative journalists and even more support staff for online publishing.

ProPublica is a fully online journalism resource. As part of a major website upgrade, all content needed to be migrated and verified. This included thousands of published articles, metadata, categorizations, and workflows.

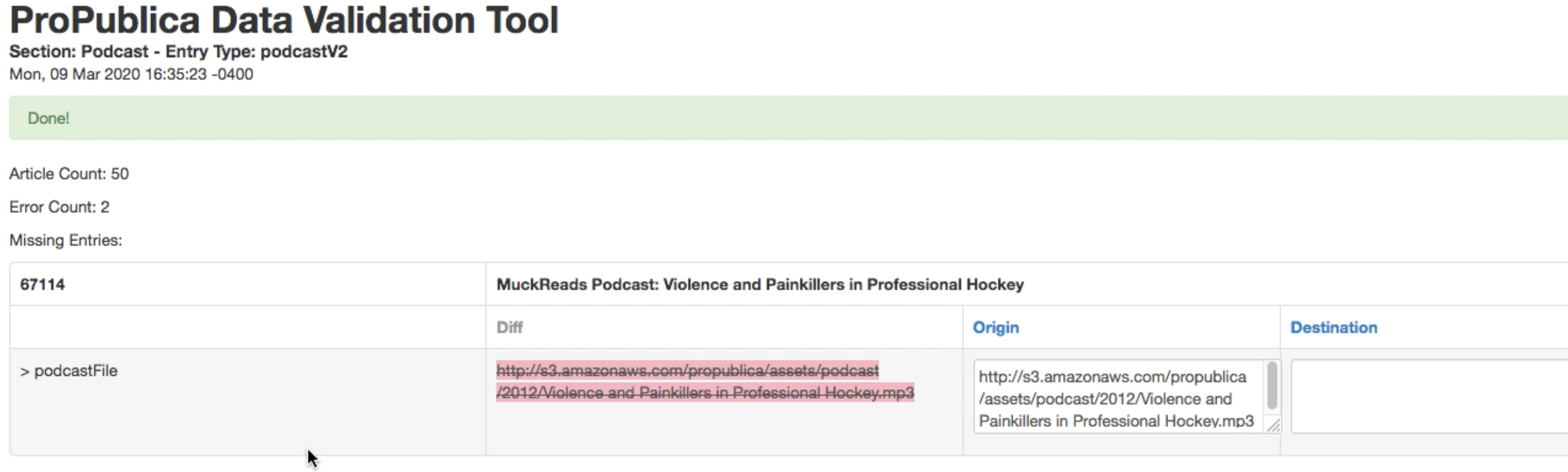

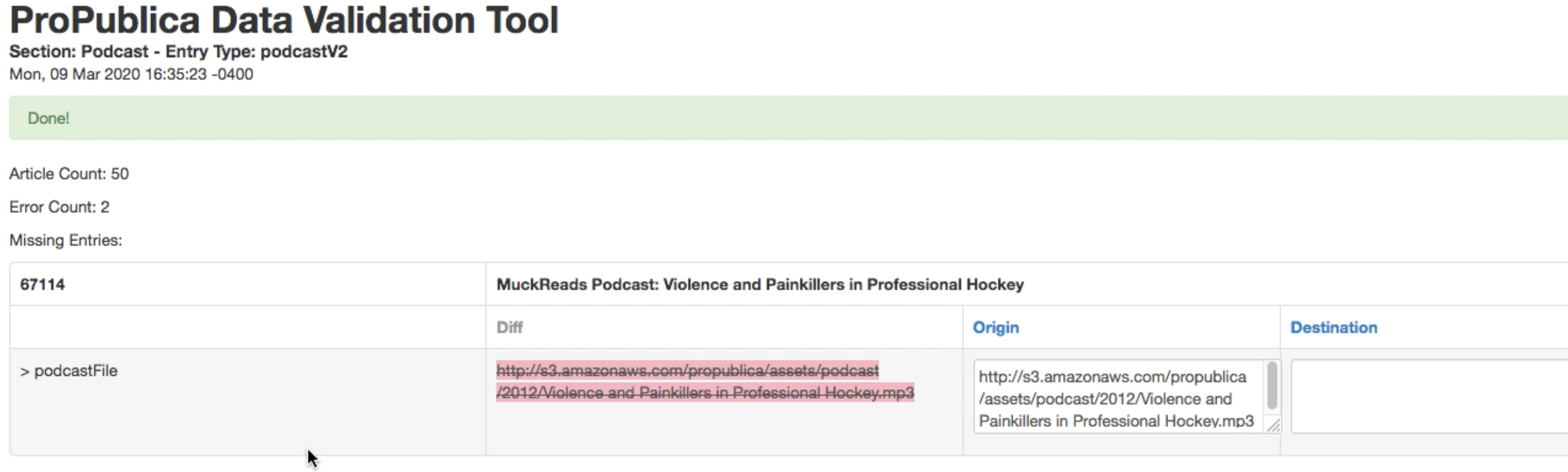

We built a new quasi-AI-powered content validation tool that let a single person validate millions of pieces of data.

ProPublica's website has more than 30,000 stories, each with more than 70 data attributes. In total, more than 2 million items of data needed to be verified.

We designed a custom tool and used it to confirm that the migrated data stayed reliable and stable up to launch and afterward.
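To give a feel for what checking every attribute of every story involves, here is a minimal sketch of a field-by-field comparison between the old and new systems. It is an illustration only, not the tool we built: the function names (`compare_story`, `validate_migration`), the record layout (plain dictionaries keyed by story ID), and the sample fields are all assumptions made for the example.

```python
from typing import Any

# Sentinel so that a missing attribute is distinguishable from one set to None.
_MISSING = object()


def compare_story(source: dict[str, Any], migrated: dict[str, Any]) -> list[str]:
    """Return the sorted names of attributes that differ between two records."""
    mismatches = []
    for field in sorted(source.keys() | migrated.keys()):
        if source.get(field, _MISSING) != migrated.get(field, _MISSING):
            mismatches.append(field)
    return mismatches


def validate_migration(
    source_stories: dict[str, dict[str, Any]],
    migrated_stories: dict[str, dict[str, Any]],
) -> dict[str, list[str]]:
    """Map each failing story ID to the attributes that did not match."""
    report: dict[str, list[str]] = {}
    for story_id, source in source_stories.items():
        migrated = migrated_stories.get(story_id)
        if migrated is None:
            # Hypothetical marker for a story that never arrived.
            report[story_id] = ["<story missing>"]
            continue
        mismatches = compare_story(source, migrated)
        if mismatches:
            report[story_id] = mismatches
    return report


# Hypothetical sample data: two stories, three attributes each.
old_site = {
    "a1": {"title": "Story A", "author": "J. Doe", "category": "Health"},
    "b2": {"title": "Story B", "author": "R. Roe", "category": "Politics"},
}
new_site = {
    "a1": {"title": "Story A", "author": "J. Doe", "category": "Health"},
    "b2": {"title": "Story B", "author": "R. Roe"},  # category was dropped
}

report = validate_migration(old_site, new_site)
```

In this sample, `report` flags only story `b2`, for its missing `category`. Because the check is automated, it can be rerun after every migration pass, which is what makes validation at this scale practical for one person.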

We cut content validation from a months-long process to one that takes just minutes.